Imagine the internet is a library with millions of books. Traditionally, search engines use “robots.txt” to learn which aisles they can and cannot enter, and “sitemap.xml” to get a list of all the books. But both files only tell machines how to crawl the site.

What is llms.txt?

In simple terms, “llms.txt” is a human-readable guidebook for Large Language Models (LLMs) such as ChatGPT or Gemini. It’s a plain-text file, written in Markdown, that you place on your website to tell these AI models:

- What your website is about: A concise summary of your content.

- Which parts are most important: Links to your key documentation, product pages, policies, or cornerstone articles.

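- How they can use your content: Free-form notes on whether training use is allowed, whether attribution is required, or whether certain sections are restricted. These notes are advisory: the llms.txt proposal defines no enforceable permission directives, and crawler access is still governed by robots.txt.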

Think of it as a curated map for AI, designed to help LLMs quickly understand the most valuable and relevant information on your site, instead of having to “guess” by sifting through all the complex code and various elements of a webpage.
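To make the "curated map" idea concrete, here is a minimal sketch of how a tool might read such a file. It assumes the layout described in the llms.txt proposal (an H1 title, a blockquote summary, then H2 sections of Markdown links); the `parse_llms_txt` helper and the sample content are hypothetical, not part of any official library.

```python
import re

# Matches "- [name](url)" with an optional ": note" suffix.
LINK_RE = re.compile(
    r"^- \[(?P<name>[^\]]+)\]\((?P<url>[^)]+)\)(?::\s*(?P<note>.*))?$"
)


def parse_llms_txt(text):
    """Split llms.txt text into title, summary, and per-section link lists."""
    parsed = {"title": None, "summary": None, "sections": {}}
    current = None
    for raw in text.splitlines():
        line = raw.strip()
        if line.startswith("# ") and parsed["title"] is None:
            parsed["title"] = line[2:].strip()
        elif line.startswith("> ") and parsed["summary"] is None:
            parsed["summary"] = line[2:].strip()
        elif line.startswith("## "):
            current = line[3:].strip()
            parsed["sections"][current] = []
        elif current is not None:
            match = LINK_RE.match(line)
            if match:
                parsed["sections"][current].append(match.groupdict())
    return parsed


# Hypothetical file content; the site and URLs are placeholders.
sample = """# Example Site

> A short summary of what the site covers.

## Docs

- [Getting started](https://example.com/docs/start.md): Setup guide
- [Policies](https://example.com/policies.md)
"""

info = parse_llms_txt(sample)
print(info["title"])  # Example Site
print(info["sections"]["Docs"][0]["url"])
```

The point of the sketch is that a few lines of parsing recover the summary and the prioritized links, with no HTML scraping involved.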

Ways “llms.txt” Can Be Used

- Improved AI Understanding and Responses:

  - Accurate Summaries: LLMs can generate more precise summaries of your content, as they’re guided to the most important parts.

  - Better Q&A: When users ask AI questions about your business or content, the LLM can provide more accurate and relevant answers by referencing your llms.txt file.

  - Enhanced Context: It helps LLMs understand the relationships between different pieces of content on your site, leading to more contextually aware responses.

- Generative Engine Optimization (GEO):

  - AI Visibility: Just as SEO helps you rank in traditional search, llms.txt aims to improve your visibility in AI-powered search results and chatbots.

  - Accurate Citations: You can point AI models to the pages you want cited as the source for information, potentially driving referral traffic back to your site.

  - Content Control: It gives content owners a place to state how they would like their data to be used by AI models, addressing concerns about data privacy and usage, although models are not obliged to honor those statements.

- Developer Documentation & APIs:

  - For platforms with extensive documentation, llms.txt (or its more comprehensive cousin, llms-full.txt, which inlines all content rather than linking to it) can give AI agents and code editors a structured, easily digestible version of the API docs. This lets developers use AI tools that can directly understand and interact with the platform’s APIs.
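As a rough illustration of the llms-full.txt idea, the sketch below inlines each page’s Markdown under a shared header instead of linking to it. The exact layout of llms-full.txt is not fixed by the text above, so the structure used here, the `build_llms_full` helper, and the sample pages are all assumptions.

```python
def build_llms_full(title, summary, pages):
    """Inline every page's Markdown under the site header (assumed layout)."""
    parts = [f"# {title}", "", f"> {summary}", ""]
    for page_title, markdown_body in pages:
        parts.append(f"## {page_title}")
        parts.append("")
        parts.append(markdown_body.strip())
        parts.append("")
    return "\n".join(parts).rstrip() + "\n"


# Hypothetical API docs; titles and bodies are placeholders.
pages = [
    ("Authentication", "Send the API key in the `Authorization` header.\n"),
    ("Rate limits", "Requests are limited per key."),
]
full_text = build_llms_full(
    "Example API", "Reference docs for a hypothetical API.", pages
)
print(full_text)
```

The trade-off is size: the inlined file saves an agent from following links, but it grows with every page you add.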

What is the future of “llms.txt”?

llms.txt is a relatively new proposed standard, and it is still evolving. Here’s what we might see:

- Increased Adoption: As AI-powered search and assistants become more prevalent, more websites will likely adopt llms.txt to ensure their content is properly understood and used by LLMs.

- Standardization: llms.txt is currently only a “proposed” standard, but it could move towards formal standardization by a web standards body (such as W3C) if major LLM providers fully commit to it.

- Sophisticated AI Interaction: We could see LLMs moving beyond just reading content to directly interacting with products and services through standardized protocols, potentially leveraging information from llms.txt.

- Enhanced Analytics: LLM providers might offer better analytics to show website owners how their llms.txt files are being used and how much traffic or engagement they’re generating from AI.

- Automatic Generation: Tools for automatically generating and updating llms.txt files are likely to become more common, making the file easier for website owners to implement.

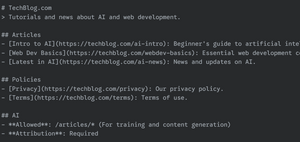

Example: Implementing “llms.txt”

Here’s a simplified example of what an llms.txt file might look like for a hypothetical tech blog:
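A minimal sketch, assuming the layout from the llms.txt proposal: an H1 title, a blockquote summary, and H2 sections of Markdown links, with an “Optional” section for links a model may skip when context is tight. The blog name, the URLs, and the `render_llms_txt` helper are hypothetical; the comments at the end show the file it produces.

```python
def render_llms_txt(title, summary, sections):
    """Render llms.txt text: H1 title, blockquote summary, H2 link sections."""
    lines = [f"# {title}", "", f"> {summary}", ""]
    for heading, links in sections.items():
        lines.append(f"## {heading}")
        lines.append("")
        for name, url, note in links:
            lines.append(f"- [{name}]({url}): {note}")
        lines.append("")
    return "\n".join(lines).rstrip() + "\n"


# Hypothetical tech blog; every name and URL here is a placeholder.
example = render_llms_txt(
    title="Example Tech Blog",
    summary="Tutorials and explainers on AI and web development.",
    sections={
        "Docs": [
            ("AI basics", "https://www.yourwebsite.com/docs/ai-basics.md",
             "Introductory guide"),
        ],
        "Optional": [
            ("Archive", "https://www.yourwebsite.com/archive.md", "Older posts"),
        ],
    },
)
print(example)

# Resulting llms.txt content:
#
# # Example Tech Blog
#
# > Tutorials and explainers on AI and web development.
#
# ## Docs
#
# - [AI basics](https://www.yourwebsite.com/docs/ai-basics.md): Introductory guide
#
# ## Optional
#
# - [Archive](https://www.yourwebsite.com/archive.md): Older posts
```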

Implementation Steps (General):

- Create the file: Create a new text file named llms.txt (and optionally llms-full.txt).

- Write content in Markdown: Populate the file with structured information about your website using Markdown syntax (headings, lists, links, blockquotes).

- Host it at your root directory: Upload the llms.txt file to the root directory of your website (e.g., https://www.yourwebsite.com/llms.txt).

- Provide Markdown versions of linked pages: Because llms.txt links out to content rather than inlining it (as llms-full.txt does), make sure each linked page (e.g., /docs/ai-basics.md) is also available in Markdown format.

- Test and validate: Confirm that the file loads at its public URL, then review and update it regularly so that it reflects changes on your website and keeps guiding AI models accurately.
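The steps above can be partly checked with a small script. The sketch below is a heuristic, not an official validator: it assumes the file should sit at `/llms.txt`, start with an H1 title, and link only to `.md` pages, as described in the steps. The `check_llms_txt` helper and the sample inputs are hypothetical.

```python
import re
from urllib.parse import urlsplit


def check_llms_txt(file_url, text):
    """Return a list of problems found; an empty list means no issues detected."""
    problems = []

    # Step: host the file at the root of the site.
    if urlsplit(file_url).path != "/llms.txt":
        problems.append("file should be served from the site root as /llms.txt")

    # Step: write content in Markdown, starting with an H1 title.
    lines = [line for line in text.splitlines() if line.strip()]
    if not lines or not lines[0].startswith("# "):
        problems.append("first non-empty line should be an H1 title")

    # Step: linked pages should also be available as Markdown.
    for url in re.findall(r"\]\(([^)\s]+)\)", text):
        if not urlsplit(url).path.endswith(".md"):
            problems.append(f"linked page is not Markdown: {url}")

    return problems


good = (
    "# Site\n\n> Summary\n\n## Docs\n\n"
    "- [AI basics](https://www.yourwebsite.com/docs/ai-basics.md): Intro\n"
)
print(check_llms_txt("https://www.yourwebsite.com/llms.txt", good))  # []
```

A check like this fits naturally into a deployment pipeline, so the file is re-validated whenever the site changes.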

“llms.txt” is a straightforward yet powerful tool that acts as a guide for AI models, helping them understand, prioritize, and appropriately use content from websites.

By providing a clear, structured overview of a site’s most important information and usage preferences, llms.txt can enable more accurate AI responses, improve content visibility in AI-powered search, and give content owners a way to signal how they would like their digital assets used in the evolving landscape of artificial intelligence. It’s a small file with a potentially big impact on how your content interacts with the AI-driven web.